
[DL Specialization] C2W2A1

Optimization

Gradient Descent

import numpy as np

import matplotlib.pyplot as plt

import scipy.io

import math

import sklearn

import sklearn.datasets

from opt_utils_v1a import load_params_and_grads, initialize_parameters, forward_propagation, backward_propagation

from opt_utils_v1a import compute_cost, predict, predict_dec, plot_decision_boundary, load_dataset

from copy import deepcopy

from testCases import *

from public_tests import *

%matplotlib inline

plt.rcParams['figure.figsize'] = (7.0, 4.0) # set default size of plots

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'

%load_ext autoreload

%autoreload 2

def update_parameters_with_gd(parameters, grads, learning_rate):
    L = len(parameters) // 2 # number of layers in the neural networks

    # Update rule for each parameter
    for l in range(1, L + 1):
        parameters["W" + str(l)] -= learning_rate * grads["dW" + str(l)]
        parameters["b" + str(l)] -= learning_rate * grads["db" + str(l)]

    return parameters

parameters, grads, learning_rate = update_parameters_with_gd_test_case()

learning_rate = 0.01

parameters = update_parameters_with_gd(parameters, grads, learning_rate)

print("W1 =\n" + str(parameters["W1"]))

print("b1 =\n" + str(parameters["b1"]))

print("W2 =\n" + str(parameters["W2"]))

print("b2 =\n" + str(parameters["b2"]))

update_parameters_with_gd_test(update_parameters_with_gd)

W1 =

[[ 1.63312395 -0.61217855 -0.5339999 ]

[-1.06196243 0.85396039 -2.3105546 ]]

b1 =

[[ 1.73978682]

[-0.77021546]]

W2 =

[[ 0.32587637 -0.24814147]

[ 1.47146563 -2.05746183]

[-0.32772076 -0.37713775]]

b2 =

[[ 1.13773698]

[-1.09301954]

[-0.16397615]]
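One detail worth noting about `update_parameters_with_gd`: the `-=` operator writes into the arrays held by the `parameters` dictionary that the caller passed in. The sketch below illustrates the difference on toy values. `gd_step_inplace` and `gd_step_copy` are hypothetical helper names, not part of the assignment, and the `deepcopy` variant is only one possible way to leave the inputs untouched.

```python
from copy import deepcopy

import numpy as np


def gd_step_inplace(parameters, grads, learning_rate):
    # Same style of update as in the post: "-=" modifies the caller's arrays.
    for key in parameters:
        parameters[key] -= learning_rate * grads["d" + key]
    return parameters


def gd_step_copy(parameters, grads, learning_rate):
    # Hypothetical variant: update a deep copy so the inputs stay unchanged.
    updated = deepcopy(parameters)
    for key in updated:
        updated[key] -= learning_rate * grads["d" + key]
    return updated


params = {"W1": np.array([[1.0, 2.0]]), "b1": np.array([[0.5]])}
grads = {"dW1": np.array([[10.0, 10.0]]), "db1": np.array([[1.0]])}

new_params = gd_step_copy(params, grads, learning_rate=0.1)
print(params["W1"])      # the input is still [[1. 2.]]
print(new_params["W1"])  # input minus 0.1 * gradient

same = gd_step_inplace(params, grads, learning_rate=0.1)
print(same is params)    # the in-place version returns the same dictionary
```

Whether in-place mutation matters depends on how the caller reuses the original dictionary; inside the training loop of this post the returned value replaces `parameters` anyway.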

Mini-Batch Gradient Descent

def random_mini_batches(X, Y, mini_batch_size = 64, seed = 0):
    np.random.seed(seed)
    m = X.shape[1]
    mini_batches = []

    # Step 1: Shuffle (X, Y)
    permutation = list(np.random.permutation(m))
    shuffled_X = X[:, permutation]
    shuffled_Y = Y[:, permutation].reshape((1, m))

    inc = mini_batch_size

    # Step 2: Partition (shuffled_X, shuffled_Y).
    # Cases with a complete mini-batch size only, i.e. each of 64 examples.
    num_complete_minibatches = math.floor(m / mini_batch_size) # number of mini-batches of size mini_batch_size in your partitioning
    for k in range(0, num_complete_minibatches):
        mini_batch_X = shuffled_X[:, k * mini_batch_size : (k + 1) * mini_batch_size]
        mini_batch_Y = shuffled_Y[:, k * mini_batch_size : (k + 1) * mini_batch_size]
        mini_batch = (mini_batch_X, mini_batch_Y)
        mini_batches.append(mini_batch)

    # For handling the end case (last mini-batch < mini_batch_size, i.e. fewer than 64)
    if m % mini_batch_size != 0:
        mini_batch_X = shuffled_X[:, num_complete_minibatches * mini_batch_size : m]
        mini_batch_Y = shuffled_Y[:, num_complete_minibatches * mini_batch_size : m]
        mini_batch = (mini_batch_X, mini_batch_Y)
        mini_batches.append(mini_batch)

    return mini_batches

np.random.seed(1)

mini_batch_size = 64

nx = 12288

m = 148

X = np.array([x for x in range(nx * m)]).reshape((m, nx)).T

Y = np.random.randn(1, m) < 0.5

mini_batches = random_mini_batches(X, Y, mini_batch_size)

n_batches = len(mini_batches)

assert n_batches == math.ceil(m / mini_batch_size), f"Wrong number of mini batches. {n_batches} != {math.ceil(m / mini_batch_size)}"

for k in range(n_batches - 1):
    assert mini_batches[k][0].shape == (nx, mini_batch_size), f"Wrong shape in {k} mini batch for X"
    assert mini_batches[k][1].shape == (1, mini_batch_size), f"Wrong shape in {k} mini batch for Y"
    assert np.sum(np.sum(mini_batches[k][0] - mini_batches[k][0][0], axis=0)) == ((nx * (nx - 1) / 2 ) * mini_batch_size), "Wrong values. It happens if the order of X rows(features) changes"

if ( m % mini_batch_size > 0):
    assert mini_batches[n_batches - 1][0].shape == (nx, m % mini_batch_size), f"Wrong shape in the last minibatch. {mini_batches[n_batches - 1][0].shape} != {(nx, m % mini_batch_size)}"

assert np.allclose(mini_batches[0][0][0][0:3], [294912, 86016, 454656]), "Wrong values. Check the indexes used to form the mini batches"
assert np.allclose(mini_batches[-1][0][-1][0:3], [1425407, 1769471, 897023]), "Wrong values. Check the indexes used to form the mini batches"

t_X, t_Y, mini_batch_size = random_mini_batches_test_case()

mini_batches = random_mini_batches(t_X, t_Y, mini_batch_size)

print ("shape of the 1st mini_batch_X: " + str(mini_batches[0][0].shape))

print ("shape of the 2nd mini_batch_X: " + str(mini_batches[1][0].shape))

print ("shape of the 3rd mini_batch_X: " + str(mini_batches[2][0].shape))

print ("shape of the 1st mini_batch_Y: " + str(mini_batches[0][1].shape))

print ("shape of the 2nd mini_batch_Y: " + str(mini_batches[1][1].shape))

print ("shape of the 3rd mini_batch_Y: " + str(mini_batches[2][1].shape))

print ("mini batch sanity check: " + str(mini_batches[0][0][0][0:3]))

random_mini_batches_test(random_mini_batches)

shape of the 1st mini_batch_X: (12288, 64)

shape of the 2nd mini_batch_X: (12288, 64)

shape of the 3rd mini_batch_X: (12288, 20)

shape of the 1st mini_batch_Y: (1, 64)

shape of the 2nd mini_batch_Y: (1, 64)

shape of the 3rd mini_batch_Y: (1, 20)

mini batch sanity check: [ 0.90085595 -0.7612069 0.2344157 ]
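The shapes printed above (64, 64, 20 columns) follow directly from the partition arithmetic in `random_mini_batches`: 148 examples split into full batches of 64 plus one remainder batch. A minimal sketch of that arithmetic, using a hypothetical helper `mini_batch_sizes` that is not part of the assignment:

```python
import math


def mini_batch_sizes(m, mini_batch_size):
    # Hypothetical helper: sizes of the batches produced by the partition step.
    num_complete = m // mini_batch_size
    sizes = [mini_batch_size] * num_complete
    remainder = m % mini_batch_size
    if remainder != 0:
        # The last, smaller mini-batch holds the leftover examples.
        sizes.append(remainder)
    return sizes


sizes = mini_batch_sizes(148, 64)
print(sizes)       # [64, 64, 20]
print(len(sizes))  # 3, the same as math.ceil(148 / 64)
```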

Momentum

def initialize_velocity(parameters):
    L = len(parameters) // 2
    v = {}

    # Initialize velocity
    for l in range(1, L + 1):
        v["dW" + str(l)] = np.zeros_like(parameters["W" + str(l)])
        v["db" + str(l)] = np.zeros_like(parameters["b" + str(l)])

    return v

parameters = initialize_velocity_test_case()

v = initialize_velocity(parameters)

print("v[\"dW1\"] =\n" + str(v["dW1"]))

print("v[\"db1\"] =\n" + str(v["db1"]))

print("v[\"dW2\"] =\n" + str(v["dW2"]))

print("v[\"db2\"] =\n" + str(v["db2"]))

initialize_velocity_test(initialize_velocity)

v["dW1"] =

[[0. 0.]

[0. 0.]

[0. 0.]]

v["db1"] =

[[0.]

[0.]

[0.]]

v["dW2"] =

[[0. 0. 0.]

[0. 0. 0.]

[0. 0. 0.]]

v["db2"] =

[[0.]

[0.]

[0.]]

def update_parameters_with_momentum(parameters, grads, v, beta, learning_rate):
    L = len(parameters) // 2

    # Momentum update for each parameter
    for l in range(1, L + 1):
        v["dW" + str(l)] = beta * v["dW" + str(l)] + (1 - beta) * grads["dW" + str(l)]
        v["db" + str(l)] = beta * v["db" + str(l)] + (1 - beta) * grads["db" + str(l)]
        parameters["W" + str(l)] -= learning_rate * v["dW" + str(l)]
        parameters["b" + str(l)] -= learning_rate * v["db" + str(l)]

    return parameters, v

parameters, grads, v = update_parameters_with_momentum_test_case()

parameters, v = update_parameters_with_momentum(parameters, grads, v, beta = 0.9, learning_rate = 0.01)

print("W1 = \n" + str(parameters["W1"]))

print("b1 = \n" + str(parameters["b1"]))

print("W2 = \n" + str(parameters["W2"]))

print("b2 = \n" + str(parameters["b2"]))

print("v[\"dW1\"] = \n" + str(v["dW1"]))

print("v[\"db1\"] = \n" + str(v["db1"]))

print("v[\"dW2\"] = \n" + str(v["dW2"]))

print("v[\"db2\"] = \n" + str(v["db2"]))

update_parameters_with_momentum_test(update_parameters_with_momentum)

W1 =

[[ 1.62522322 -0.61179863 -0.52875457]

[-1.071868 0.86426291 -2.30244029]]

b1 =

[[ 1.74430927]

[-0.76210776]]

W2 =

[[ 0.31972282 -0.24924749]

[ 1.46304371 -2.05987282]

[-0.32294756 -0.38336269]]

b2 =

[[ 1.1341662 ]

[-1.09920409]

[-0.171583 ]]

v["dW1"] =

[[-0.08778584 0.00422137 0.05828152]

[-0.11006192 0.11447237 0.09015907]]

v["db1"] =

[[0.05024943]

[0.09008559]]

v["dW2"] =

[[-0.06837279 -0.01228902]

[-0.09357694 -0.02678881]

[ 0.05303555 -0.06916608]]

v["db2"] =

[[-0.03967535]

[-0.06871727]

[-0.08452056]]
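The velocity in `update_parameters_with_momentum` is an exponentially weighted average of the gradients, so starting from zero it takes several steps to build up. A minimal sketch under a simplifying assumption, namely a constant scalar gradient of 1.0 (purely illustrative, not the assignment's test data):

```python
beta = 0.9
grad = 1.0  # assumed constant gradient, for illustration only
v = 0.0     # zero-initialized velocity, as in initialize_velocity
history = []

for t in range(1, 51):
    # Same recurrence as the momentum update in the post.
    v = beta * v + (1 - beta) * grad
    history.append(v)

print(history[0])   # roughly 0.1: the first step uses only 10% of the gradient
print(history[9])   # roughly 1 - 0.9**10
print(history[-1])  # close to the gradient itself after 50 steps
```

With a constant gradient the recurrence has the closed form `v_t = (1 - beta**t) * grad`, which is why the early steps under momentum are smaller than plain gradient descent with the same learning rate.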

Adam

def initialize_adam(parameters):
    L = len(parameters) // 2 # number of layers in the neural networks
    v = {}
    s = {}

    # Initialize v, s. Input: "parameters". Outputs: "v, s".
    for l in range(1, L + 1):
        v["dW" + str(l)] = np.zeros_like(parameters["W" + str(l)])
        v["db" + str(l)] = np.zeros_like(parameters["b" + str(l)])
        s["dW" + str(l)] = np.zeros_like(parameters["W" + str(l)])
        s["db" + str(l)] = np.zeros_like(parameters["b" + str(l)])

    return v, s

parameters = initialize_adam_test_case()

v, s = initialize_adam(parameters)

print("v[\"dW1\"] = \n" + str(v["dW1"]))

print("v[\"db1\"] = \n" + str(v["db1"]))

print("v[\"dW2\"] = \n" + str(v["dW2"]))

print("v[\"db2\"] = \n" + str(v["db2"]))

print("s[\"dW1\"] = \n" + str(s["dW1"]))

print("s[\"db1\"] = \n" + str(s["db1"]))

print("s[\"dW2\"] = \n" + str(s["dW2"]))

print("s[\"db2\"] = \n" + str(s["db2"]))

initialize_adam_test(initialize_adam)

v["dW1"] =

[[0. 0. 0.]

[0. 0. 0.]]

v["db1"] =

[[0.]

[0.]]

v["dW2"] =

[[0. 0.]

[0. 0.]

[0. 0.]]

v["db2"] =

[[0.]

[0.]

[0.]]

s["dW1"] =

[[0. 0. 0.]

[0. 0. 0.]]

s["db1"] =

[[0.]

[0.]]

s["dW2"] =

[[0. 0.]

[0. 0.]

[0. 0.]]

s["db2"] =

[[0.]

[0.]

[0.]]

def update_parameters_with_adam(parameters, grads, v, s, t, learning_rate = 0.01,
                                beta1 = 0.9, beta2 = 0.999, epsilon = 1e-8):
    L = len(parameters) // 2 # number of layers in the neural networks
    v_corrected = {} # Initializing first moment estimate, python dictionary
    s_corrected = {} # Initializing second moment estimate, python dictionary

    # Perform Adam update on all parameters
    for l in range(1, L + 1):
        v["dW" + str(l)] = beta1 * v["dW" + str(l)] + (1 - beta1) * grads["dW" + str(l)]
        v["db" + str(l)] = beta1 * v["db" + str(l)] + (1 - beta1) * grads["db" + str(l)]

        # Compute bias-corrected first moment estimate. Inputs: "v, beta1, t". Output: "v_corrected".
        v_corrected["dW" + str(l)] = v["dW" + str(l)] / (1 - beta1 ** t)
        v_corrected["db" + str(l)] = v["db" + str(l)] / (1 - beta1 ** t)

        # Moving average of the squared gradients. Inputs: "s, grads, beta2". Output: "s".
        s["dW" + str(l)] = beta2 * s["dW" + str(l)] + (1 - beta2) * (grads["dW" + str(l)] ** 2)
        s["db" + str(l)] = beta2 * s["db" + str(l)] + (1 - beta2) * (grads["db" + str(l)] ** 2)

        # Compute bias-corrected second raw moment estimate. Inputs: "s, beta2, t". Output: "s_corrected".
        s_corrected["dW" + str(l)] = s["dW" + str(l)] / (1 - beta2 ** t)
        s_corrected["db" + str(l)] = s["db" + str(l)] / (1 - beta2 ** t)

        # Update parameters. Inputs: "parameters, learning_rate, v_corrected, s_corrected, epsilon". Output: "parameters".
        parameters["W" + str(l)] -= learning_rate * (v_corrected["dW" + str(l)] / (np.sqrt(s_corrected["dW" + str(l)]) + epsilon))
        parameters["b" + str(l)] -= learning_rate * (v_corrected["db" + str(l)] / (np.sqrt(s_corrected["db" + str(l)]) + epsilon))

    return parameters, v, s, v_corrected, s_corrected

parametersi, grads, vi, si, t, learning_rate, beta1, beta2, epsilon = update_parameters_with_adam_test_case()

parameters, v, s, vc, sc = update_parameters_with_adam(parametersi, grads, vi, si, t, learning_rate, beta1, beta2, epsilon)

print(f"W1 = \n{parameters['W1']}")

print(f"W2 = \n{parameters['W2']}")

print(f"b1 = \n{parameters['b1']}")

print(f"b2 = \n{parameters['b2']}")

update_parameters_with_adam_test(update_parameters_with_adam)

W1 =

[[ 1.63937725 -0.62327448 -0.54308727]

[-1.0578897 0.85032154 -2.31657668]]

W2 =

[[ 0.33400549 -0.23563857]

[ 1.47715417 -2.04561842]

[-0.33729882 -0.36908457]]

b1 =

[[ 1.72995096]

[-0.7762447 ]]

b2 =

[[ 1.14852557]

[-1.08492339]

[-0.15740527]]
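The bias correction in `update_parameters_with_adam` matters most at the start of training. With zero-initialized `v` and `s` and `t = 1`, the corrected moments reduce to the gradient and its square, so the first step has magnitude close to `learning_rate` regardless of the gradient's scale. A minimal sketch of that first step, assuming element-wise NumPy arrays and a hypothetical helper name `adam_first_step`:

```python
import numpy as np


def adam_first_step(grad, learning_rate=0.01, beta1=0.9, beta2=0.999, epsilon=1e-8):
    # Hypothetical helper: the Adam step at t = 1 with v = s = 0 beforehand.
    t = 1
    v = (1 - beta1) * grad
    s = (1 - beta2) * grad ** 2
    v_corrected = v / (1 - beta1 ** t)  # equals grad at t = 1
    s_corrected = s / (1 - beta2 ** t)  # equals grad ** 2 at t = 1
    return learning_rate * v_corrected / (np.sqrt(s_corrected) + epsilon)


grads = np.array([1e-3, 1.0, -50.0])
step = adam_first_step(grads)
print(step)  # each entry is close to +/- 0.01 despite very different gradient sizes
```

Without the division by `1 - beta1 ** t` and `1 - beta2 ** t`, the same first step would be scaled by `(1 - beta1) / sqrt(1 - beta2)`, about 3.16 with the default betas.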

Model with different Optimization algorithms

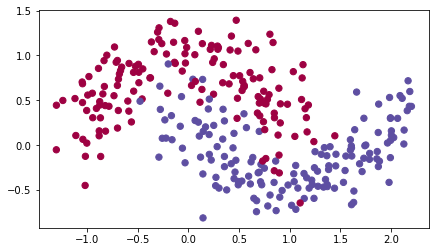

train_X, train_Y = load_dataset()

def model(X, Y, layers_dims, optimizer, learning_rate = 0.0007, mini_batch_size = 64, beta = 0.9,

beta1 = 0.9, beta2 = 0.999, epsilon = 1e-8, num_epochs = 5000, print_cost = True):

L = len(layers_dims) # number of layers in the neural networks

costs = [] # to keep track of the cost

t = 0 # initializing the counter required for Adam update

seed = 10 # For grading purposes, so that your "random" minibatches are the same as ours

m = X.shape[1] # number of training examples

# Initialize parameters

parameters = initialize_parameters(layers_dims)

# Initialize the optimizer

if optimizer == "gd":

pass # no initialization required for gradient descent

elif optimizer == "momentum":

v = initialize_velocity(parameters)

elif optimizer == "adam":

v, s = initialize_adam(parameters)

# Optimization loop

for i in range(num_epochs):

# Define the random minibatches. We increment the seed to reshuffle differently the dataset after each epoch

seed = seed + 1

minibatches = random_mini_batches(X, Y, mini_batch_size, seed)

cost_total = 0

for minibatch in minibatches:

# Select a minibatch

(minibatch_X, minibatch_Y) = minibatch

# Forward propagation

a3, caches = forward_propagation(minibatch_X, parameters)

# Compute cost and add to the cost total

cost_total += compute_cost(a3, minibatch_Y)

# Backward propagation

grads = backward_propagation(minibatch_X, minibatch_Y, caches)

# Update parameters

if optimizer == "gd":

parameters = update_parameters_with_gd(parameters, grads, learning_rate)

elif optimizer == "momentum":

parameters, v = update_parameters_with_momentum(parameters, grads, v, beta, learning_rate)

elif optimizer == "adam":

t = t + 1 # Adam counter

parameters, v, s, _, _ = update_parameters_with_adam(parameters, grads, v, s,

t, learning_rate, beta1, beta2, epsilon)

cost_avg = cost_total / m

# Print the cost every 1000 epoch

if print_cost and i % 1000 == 0:

print ("Cost after epoch %i: %f" %(i, cost_avg))

if print_cost and i % 100 == 0:

costs.append(cost_avg)

# plot the cost

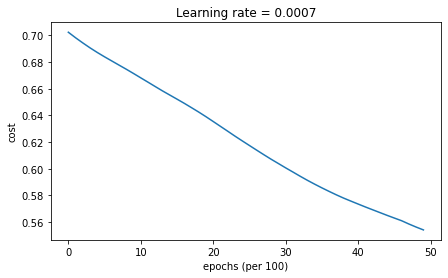

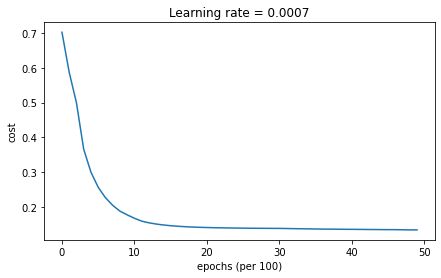

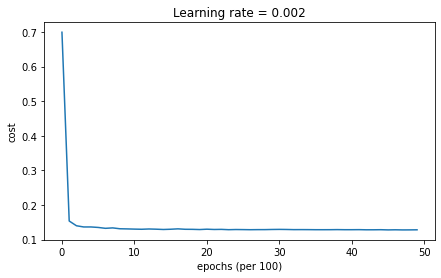

plt.plot(costs)

plt.ylabel('cost')

plt.xlabel('epochs (per 100)')

plt.title("Learning rate = " + str(learning_rate))

plt.show()

return parameters

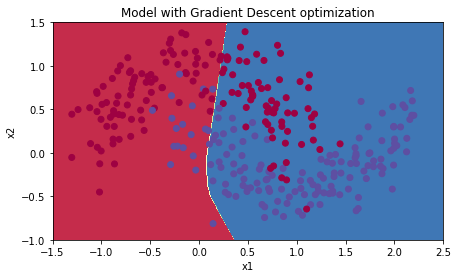

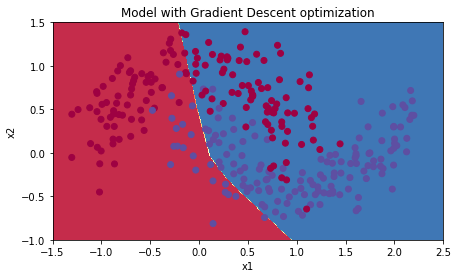

# train 3-layer model

layers_dims = [train_X.shape[0], 5, 2, 1]

parameters = model(train_X, train_Y, layers_dims, optimizer = "gd")

# Predict

predictions = predict(train_X, train_Y, parameters)

# Plot decision boundary

plt.title("Model with Gradient Descent optimization")

axes = plt.gca()

axes.set_xlim([-1.5,2.5])

axes.set_ylim([-1,1.5])

plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)

Cost after epoch 0: 0.702405

Cost after epoch 1000: 0.668101

Cost after epoch 2000: 0.635288

Cost after epoch 3000: 0.600491

Cost after epoch 4000: 0.573367

Accuracy: 0.7166666666666667

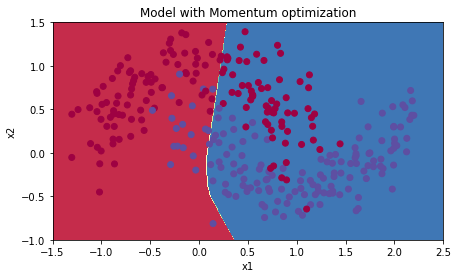

# train 3-layer model

layers_dims = [train_X.shape[0], 5, 2, 1]

parameters = model(train_X, train_Y, layers_dims, beta = 0.9, optimizer = "momentum")

# Predict

predictions = predict(train_X, train_Y, parameters)

# Plot decision boundary

plt.title("Model with Momentum optimization")

axes = plt.gca()

axes.set_xlim([-1.5,2.5])

axes.set_ylim([-1,1.5])

plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)

Cost after epoch 0: 0.702413

Cost after epoch 1000: 0.668167

Cost after epoch 2000: 0.635388

Cost after epoch 3000: 0.600591

Cost after epoch 4000: 0.573444

Accuracy: 0.7166666666666667

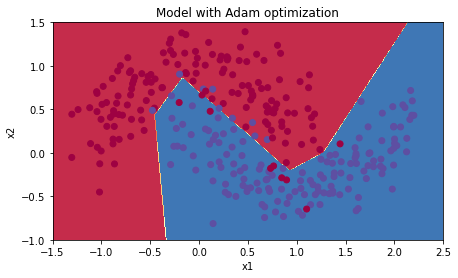

# train 3-layer model

layers_dims = [train_X.shape[0], 5, 2, 1]

parameters = model(train_X, train_Y, layers_dims, optimizer = "adam")

# Predict

predictions = predict(train_X, train_Y, parameters)

# Plot decision boundary

plt.title("Model with Adam optimization")

axes = plt.gca()

axes.set_xlim([-1.5,2.5])

axes.set_ylim([-1,1.5])

plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)

Cost after epoch 0: 0.702166

Cost after epoch 1000: 0.167845

Cost after epoch 2000: 0.141316

Cost after epoch 3000: 0.138788

Cost after epoch 4000: 0.136066

Accuracy: 0.9433333333333334

Learning Rate Decay & Scheduling

def model(X, Y, layers_dims, optimizer, learning_rate = 0.0007, mini_batch_size = 64, beta = 0.9,
          beta1 = 0.9, beta2 = 0.999, epsilon = 1e-8, num_epochs = 5000, print_cost = True, decay=None, decay_rate=1):
    L = len(layers_dims) # number of layers in the neural networks
    costs = [] # to keep track of the cost
    t = 0 # initializing the counter required for Adam update
    seed = 10 # For grading purposes, so that your "random" minibatches are the same as ours
    m = X.shape[1] # number of training examples
    lr_rates = []
    learning_rate0 = learning_rate # the original learning rate

    # Initialize parameters
    parameters = initialize_parameters(layers_dims)

    # Initialize the optimizer
    if optimizer == "gd":
        pass # no initialization required for gradient descent
    elif optimizer == "momentum":
        v = initialize_velocity(parameters)
    elif optimizer == "adam":
        v, s = initialize_adam(parameters)

    # Optimization loop
    for i in range(num_epochs):
        # Define the random minibatches. We increment the seed to reshuffle the dataset differently after each epoch
        seed = seed + 1
        minibatches = random_mini_batches(X, Y, mini_batch_size, seed)
        cost_total = 0

        for minibatch in minibatches:
            # Select a minibatch
            (minibatch_X, minibatch_Y) = minibatch

            # Forward propagation
            a3, caches = forward_propagation(minibatch_X, parameters)

            # Compute cost and add to the cost total
            cost_total += compute_cost(a3, minibatch_Y)

            # Backward propagation
            grads = backward_propagation(minibatch_X, minibatch_Y, caches)

            # Update parameters
            if optimizer == "gd":
                parameters = update_parameters_with_gd(parameters, grads, learning_rate)
            elif optimizer == "momentum":
                parameters, v = update_parameters_with_momentum(parameters, grads, v, beta, learning_rate)
            elif optimizer == "adam":
                t = t + 1 # Adam counter
                parameters, v, s, _, _ = update_parameters_with_adam(parameters, grads, v, s,
                                                                     t, learning_rate, beta1, beta2, epsilon)
        cost_avg = cost_total / m
        if decay:
            learning_rate = decay(learning_rate0, i, decay_rate)

        # Print the cost every 1000 epochs
        if print_cost and i % 1000 == 0:
            print ("Cost after epoch %i: %f" %(i, cost_avg))
            if decay:
                print("learning rate after epoch %i: %f"%(i, learning_rate))
        if print_cost and i % 100 == 0:
            costs.append(cost_avg)

    # plot the cost
    plt.plot(costs)
    plt.ylabel('cost')
    plt.xlabel('epochs (per 100)')
    plt.title("Learning rate = " + str(learning_rate))
    plt.show()

    return parameters

def update_lr(learning_rate0, epoch_num, decay_rate):
    learning_rate = learning_rate0 / (1 + decay_rate * epoch_num)
    return learning_rate

learning_rate = 0.5

print("Original learning rate: ", learning_rate)

epoch_num = 2

decay_rate = 1

learning_rate_2 = update_lr(learning_rate, epoch_num, decay_rate)

print("Updated learning rate: ", learning_rate_2)

update_lr_test(update_lr)

Original learning rate: 0.5

Updated learning rate: 0.16666666666666666

# train 3-layer model

layers_dims = [train_X.shape[0], 5, 2, 1]

parameters = model(train_X, train_Y, layers_dims, optimizer = "gd", learning_rate = 0.1, num_epochs=5000, decay=update_lr)

# Predict

predictions = predict(train_X, train_Y, parameters)

# Plot decision boundary

plt.title("Model with Gradient Descent optimization")

axes = plt.gca()

axes.set_xlim([-1.5,2.5])

axes.set_ylim([-1,1.5])

plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)

Cost after epoch 0: 0.701091

learning rate after epoch 0: 0.100000

Cost after epoch 1000: 0.661884

learning rate after epoch 1000: 0.000100

Cost after epoch 2000: 0.658620

learning rate after epoch 2000: 0.000050

Cost after epoch 3000: 0.656765

learning rate after epoch 3000: 0.000033

Cost after epoch 4000: 0.655486

learning rate after epoch 4000: 0.000025

Accuracy: 0.6533333333333333

def schedule_lr_decay(learning_rate0, epoch_num, decay_rate, time_interval=1000):
    learning_rate = learning_rate0 / (1 + decay_rate * (epoch_num // time_interval))
    return learning_rate

learning_rate = 0.5

print("Original learning rate: ", learning_rate)

epoch_num_1 = 10

epoch_num_2 = 100

decay_rate = 0.3

time_interval = 100

learning_rate_1 = schedule_lr_decay(learning_rate, epoch_num_1, decay_rate, time_interval)

learning_rate_2 = schedule_lr_decay(learning_rate, epoch_num_2, decay_rate, time_interval)

print("Updated learning rate after {} epochs: ".format(epoch_num_1), learning_rate_1)

print("Updated learning rate after {} epochs: ".format(epoch_num_2), learning_rate_2)

schedule_lr_decay_test(schedule_lr_decay)

Original learning rate: 0.5

Updated learning rate after 10 epochs: 0.5

Updated learning rate after 100 epochs: 0.3846153846153846
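The difference between the two decay functions is easiest to see side by side. The sketch below copies `update_lr` and `schedule_lr_decay` from above and evaluates them with `learning_rate0 = 0.1` and `decay_rate = 1` (the values passed to, or defaulted by, `model` in the training runs) at the epochs where the training log prints. The variable names `continuous` and `scheduled` are only illustrative.

```python
def update_lr(learning_rate0, epoch_num, decay_rate):
    # Decays on every epoch.
    return learning_rate0 / (1 + decay_rate * epoch_num)


def schedule_lr_decay(learning_rate0, epoch_num, decay_rate, time_interval=1000):
    # Decays only once per time_interval epochs (integer division).
    return learning_rate0 / (1 + decay_rate * (epoch_num // time_interval))


learning_rate0 = 0.1
decay_rate = 1
epochs = [0, 1000, 2000, 3000, 4000]

continuous = [update_lr(learning_rate0, e, decay_rate) for e in epochs]
scheduled = [schedule_lr_decay(learning_rate0, e, decay_rate) for e in epochs]

for e, c, s in zip(epochs, continuous, scheduled):
    print(f"epoch {e:4d}: every-epoch decay {c:.6f}, scheduled decay {s:.6f}")
```

With every-epoch decay the rate has already dropped by a factor of about 1000 at epoch 1000 (0.1 / 1001), which is consistent with the `0.000100` printed in the earlier training log and with the cost barely moving afterwards. The scheduled version only halves the rate at that point.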

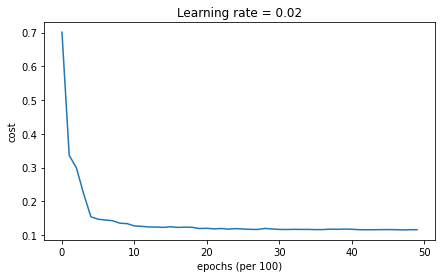

# train 3-layer model

layers_dims = [train_X.shape[0], 5, 2, 1]

parameters = model(train_X, train_Y, layers_dims, optimizer = "gd", learning_rate = 0.1, num_epochs=5000, decay=schedule_lr_decay)

# Predict

predictions = predict(train_X, train_Y, parameters)

# Plot decision boundary

plt.title("Model with Gradient Descent optimization")

axes = plt.gca()

axes.set_xlim([-1.5,2.5])

axes.set_ylim([-1,1.5])

plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)

Cost after epoch 0: 0.701091

learning rate after epoch 0: 0.100000

Cost after epoch 1000: 0.127161

learning rate after epoch 1000: 0.050000

Cost after epoch 2000: 0.120304

learning rate after epoch 2000: 0.033333

Cost after epoch 3000: 0.117033

learning rate after epoch 3000: 0.025000

Cost after epoch 4000: 0.117512

learning rate after epoch 4000: 0.020000

Accuracy: 0.9433333333333334

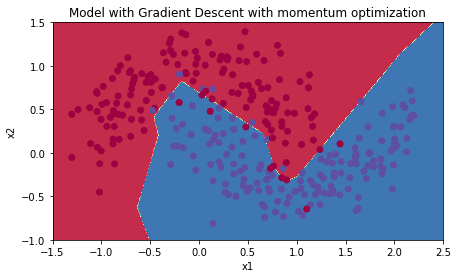

# train 3-layer model

layers_dims = [train_X.shape[0], 5, 2, 1]

parameters = model(train_X, train_Y, layers_dims, optimizer = "momentum", learning_rate = 0.1, num_epochs=5000, decay=schedule_lr_decay)

# Predict

predictions = predict(train_X, train_Y, parameters)

# Plot decision boundary

plt.title("Model with Gradient Descent with momentum optimization")

axes = plt.gca()

axes.set_xlim([-1.5,2.5])

axes.set_ylim([-1,1.5])

plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)

Cost after epoch 0: 0.702226

learning rate after epoch 0: 0.100000

Cost after epoch 1000: 0.128974

learning rate after epoch 1000: 0.050000

Cost after epoch 2000: 0.125965

learning rate after epoch 2000: 0.033333

Cost after epoch 3000: 0.123375

learning rate after epoch 3000: 0.025000

Cost after epoch 4000: 0.123218

learning rate after epoch 4000: 0.020000

Accuracy: 0.9533333333333334

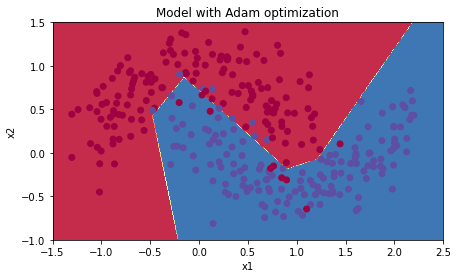

# train 3-layer model

layers_dims = [train_X.shape[0], 5, 2, 1]

parameters = model(train_X, train_Y, layers_dims, optimizer = "adam", learning_rate = 0.01, num_epochs=5000, decay=schedule_lr_decay)

# Predict

predictions = predict(train_X, train_Y, parameters)

# Plot decision boundary

plt.title("Model with Adam optimization")

axes = plt.gca()

axes.set_xlim([-1.5,2.5])

axes.set_ylim([-1,1.5])

plot_decision_boundary(lambda x: predict_dec(parameters, x.T), train_X, train_Y)

Cost after epoch 0: 0.699346

learning rate after epoch 0: 0.010000

Cost after epoch 1000: 0.130074

learning rate after epoch 1000: 0.005000

Cost after epoch 2000: 0.129826

learning rate after epoch 2000: 0.003333

Cost after epoch 3000: 0.129282

learning rate after epoch 3000: 0.002500

Cost after epoch 4000: 0.128361

learning rate after epoch 4000: 0.002000

Accuracy: 0.94
